Artificial intelligence is reshaping the global economy. It is also driving a surge in energy demand and triggering a global rush to build data centers at unprecedented scale. But inside data centers, not all AI workloads are the same, a distinction that carries significant implications for the future of the industry.

Where AI Meets the Real World

AI workloads fall into two main categories: training and inference. Training is where models learn by analyzing large datasets to identify patterns, relationships, and logic. It’s resource-intensive and typically happens in large, centralized data centers. Inference is what happens when the trained model is applied to real-world tasks. Anytime AI responds to a prompt, that is inference at work.

Inference is the part of AI most people interact with directly. It is the workload that powers search results, voice assistants, and emerging agentic systems that operate autonomously. As new applications are developed and AI’s user base expands, the volume of inference requests continues to grow rapidly. This growth makes inference increasingly important to AI operators in the coming years. McKinsey projects that inference will become the dominant AI workload by 2030. Meanwhile, Grand View Research expects the global market for AI inference to more than double over the same period, growing from $113.5 billion in 2025 to $253.75 billion in 2030.
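To express the Grand View Research projection as an annual rate, the short sketch below computes the growth multiple and the implied compound annual growth rate (CAGR). It assumes the span runs from 2025 to 2030, treated as five compounding years. The figures are the ones quoted above; the `cagr` helper is our own illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Grand View Research projection quoted above, in billions of USD.
# Assumption: the span runs from 2025 to 2030, i.e. five compounding years.
market_2025 = 113.5
market_2030 = 253.75

growth_multiple = market_2030 / market_2025
annual_rate = cagr(market_2025, market_2030, years=2030 - 2025)

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Implied CAGR:    {annual_rate:.1%}")
```

With these inputs the market grows about 2.24 times, which works out to roughly 17.5% per year.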


The Coming Shift for AI Infrastructure

The rise of inference is pushing AI infrastructure in a new direction. As demand increases, we need more places to run these workloads and more power to support them. Growing adoption is projected to drive a 255% global increase in AI data center power demand by 2030. Yet most of today’s infrastructure was built for training, which involves long, compute-heavy jobs running in large, centralized facilities. Inference operates differently: it consists of many short, latency-sensitive requests whose volume rises and falls with user demand. As inference continues to scale, the contrast between training-optimized and inference-optimized infrastructure becomes harder to ignore.


Meeting the demands of inference at scale requires a different approach to infrastructure. It means designing systems that can optimize for cost, speed, reliability, and security while adapting to volatility in workload demand. We believe these priorities will drive a shift toward decentralized edge data centers.

- Cost: Every inference has a measurable cost in energy and compute. That cost is often expressed as cost per token, the fundamental unit of data a model processes. Lowering this cost determines how much intelligence an enterprise can afford to generate. Locating inference near low-cost power and optimizing hardware utilization are among the main levers for achieving that efficiency.

- Speed: Running inference closer to where data is generated substantially reduces latency, which is essential for time-sensitive applications such as defense and healthcare.

- Reliability: Localized models provide 30% more contextual value than global models, outperforming in applications such as education, legal compliance, and public services. Edge systems can also sustain operations amid network or cloud outages.

- Security: Keeping data at the edge minimizes the risk of exposing private data to external networks, which is essential for governments, enterprises, and other organizations that handle sensitive information.

- Volatility: Inference workloads can be highly variable and unpredictable. As users seek immediate insights, demand can shift rapidly, causing sudden spikes or drops in energy use.
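Cost per token can be made concrete with a simple model: the hourly cost of running a deployment (electricity plus hardware spread over its useful life), divided by the tokens it actually serves in that hour. The sketch below is a simplified, hypothetical model. The `InferenceSite` fields, the example numbers, and the assumption that power draw stays constant regardless of utilization are all illustrative, not MARA figures. It shows how the two levers named in the Cost bullet, cheaper power and higher utilization, each lower the result.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class InferenceSite:
    """Hypothetical inference deployment; every field value used below is illustrative."""
    power_kw: float                # average electrical draw, kW (assumed constant)
    price_per_kwh: float           # electricity price, USD per kWh
    hardware_cost_usd: float       # up-front cost of servers and accelerators
    amortization_years: float      # period over which the hardware cost is spread
    peak_tokens_per_second: float  # throughput when the hardware is fully busy
    utilization: float             # fraction of time spent serving requests, 0 < u <= 1


def cost_per_million_tokens(site: InferenceSite) -> float:
    """(energy cost + amortized hardware cost) per hour / tokens served per hour, scaled to 1M tokens."""
    if not 0 < site.utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    energy_per_hour = site.power_kw * site.price_per_kwh
    hardware_per_hour = site.hardware_cost_usd / (site.amortization_years * HOURS_PER_YEAR)
    tokens_per_hour = site.peak_tokens_per_second * site.utilization * 3600
    return (energy_per_hour + hardware_per_hour) / tokens_per_hour * 1_000_000


cheap_busy = InferenceSite(power_kw=100, price_per_kwh=0.03, hardware_cost_usd=3_000_000,
                           amortization_years=4, peak_tokens_per_second=50_000, utilization=0.8)
pricey_idle = InferenceSite(power_kw=100, price_per_kwh=0.10, hardware_cost_usd=3_000_000,
                            amortization_years=4, peak_tokens_per_second=50_000, utilization=0.4)

for label, site in [("cheap power, 80% utilized", cheap_busy),
                    ("pricier power, 40% utilized", pricey_idle)]:
    print(f"{label}: ${cost_per_million_tokens(site):.2f} per million tokens")
```

With these made-up inputs, the first site comes out near $0.62 per million tokens and the second near $1.33. Here hardware amortization (about $86 per hour) dwarfs the energy bill ($3 to $10 per hour), so utilization is the bigger lever in this particular example; with a larger energy share, the power price would matter more.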

The unpredictable, inflexible nature of inference makes it harder to integrate into the grid. A Duke University study found that if workloads were designed to be more flexible, the current U.S. grid could already meet most of the projected increase in AI data center demand through 2029. The issue isn’t energy scarcity, but coordination. Unlocking the full potential of today’s grid will require smarter infrastructure that can match compute workloads to available energy in real time.
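One way to picture matching compute to available energy is load shifting: work that can wait is moved into the hours when power is cheapest, subject to the site’s power cap. The sketch below assumes a known hourly price forecast and a fixed amount of deferrable energy; the function names and the prices are hypothetical. Latency-sensitive requests cannot wait, so this applies only to the share of a workload designed to be flexible. Filling the cheapest hours first is optimal for this simple version of the problem because cost is linear in energy and each hour has an independent cap.

```python
def schedule_flexible_load(prices, energy_needed_mwh, max_mwh_per_hour):
    """Greedily place deferrable energy into the cheapest hours of a window.

    prices: forecast electricity price per hour (USD/MWh), one entry per hour.
    energy_needed_mwh: energy the deferrable jobs must consume within the window.
    max_mwh_per_hour: site power cap, i.e. the most energy any single hour can absorb.
    Returns the energy allocated to each hour, in MWh.
    """
    allocation = [0.0] * len(prices)
    remaining = energy_needed_mwh
    # Visit hours from cheapest to most expensive and fill each up to the cap.
    for hour in sorted(range(len(prices)), key=lambda h: prices[h]):
        if remaining <= 0:
            break
        take = float(min(max_mwh_per_hour, remaining))
        allocation[hour] = take
        remaining -= take
    if remaining > 1e-9:
        raise ValueError("window too short or power cap too low for the required energy")
    return allocation


def energy_cost(prices, allocation):
    """Total cost of an hourly allocation at the given prices."""
    return sum(p * e for p, e in zip(prices, allocation))


# Hypothetical 8-hour price forecast (USD/MWh) and a 150 MWh batch of deferrable work.
prices = [42, 38, 35, 60, 95, 120, 80, 45]
shifted = schedule_flexible_load(prices, energy_needed_mwh=150, max_mwh_per_hour=50)
flat = [150 / len(prices)] * len(prices)  # same energy spread evenly, no shifting

print("shifted plan:", shifted)
print("cost shifted:", energy_cost(prices, shifted))
print("cost flat:   ", energy_cost(prices, flat))
```

In this example, shifting the same 150 MWh into the three cheapest hours costs $5,750, versus about $9,656 when the load is spread evenly across the window.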

Infrastructure that is modular and energy-aware will play a significant role in the next era of compute. This is where MARA sees a strategic advantage. When energy and compute can be coordinated under one platform, cost per token goes down and utilization goes up. By owning both power and compute, MARA turns energy from a constraint into an advantage that supports inference at scale.

MARA’s Vision: A Digital Infrastructure Platform Built for Value & Intelligence

“Our mission is to harness massive volumes of low-cost power and channel them toward their most productive use cases, whether that be Bitcoin mining where load flexibility is key, or AI where lowest cost per token is key.” - Fred Thiel, Q3 2025 Shareholder Letter

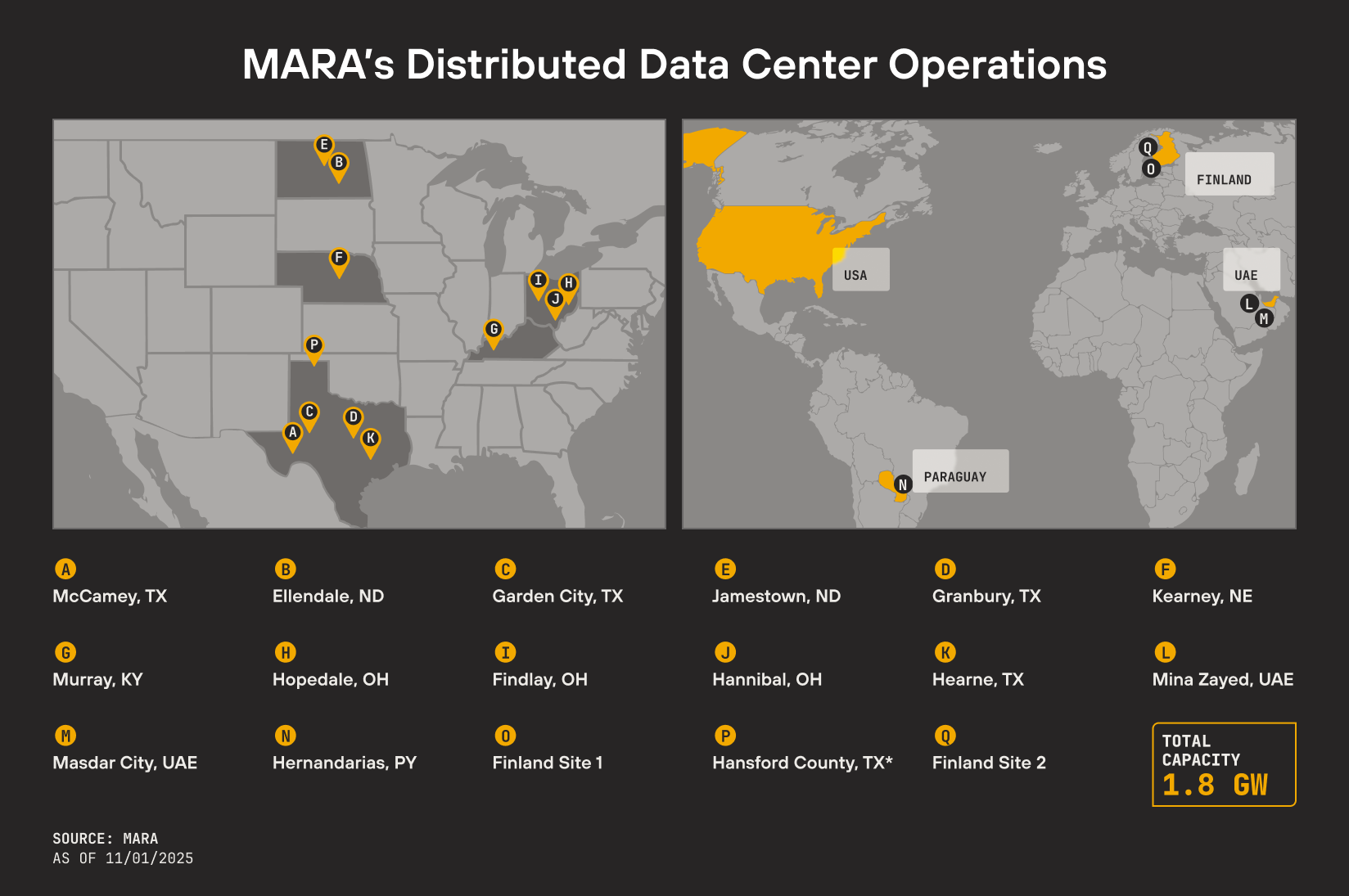

MARA’s infrastructure is well-suited for the future of AI inference. Our platform was built for high-efficiency compute, with a footprint that includes low-cost wind and gas-to-power sites at the edge. We already run these systems with flexibility, shifting workloads to use low-cost energy or give power back to the grid when energy demand is high. With 1.8 gigawatts of total capacity under control and growing, we believe the same power that fuels Bitcoin mining is equally capable of supporting inference at scale.
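The flexibility described above can be sketched as a simple price-threshold rule: a firm share of load runs every hour, while interruptible load runs only when power is cheap and is released back to the grid when prices spike, a common signal of high grid demand. The split between firm and interruptible load, the threshold, the prices, and the `dispatch` helper are hypothetical; an actual operation would also weigh contracts and forecasts that this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class HourPlan:
    price: float        # USD/MWh for the hour
    running_mw: float   # total load the site runs this hour
    released_mw: float  # interruptible load curtailed and left available to the grid


def dispatch(prices, site_capacity_mw, firm_load_mw, curtail_above):
    """Run firm load every hour; run interruptible load only while price <= curtail_above."""
    if not 0 <= firm_load_mw <= site_capacity_mw:
        raise ValueError("firm load must be between 0 and site capacity")
    interruptible_mw = site_capacity_mw - firm_load_mw
    plan = []
    for price in prices:
        if price <= curtail_above:
            plan.append(HourPlan(price, running_mw=site_capacity_mw, released_mw=0.0))
        else:
            # Price spike: drop the interruptible share and keep only the firm load.
            plan.append(HourPlan(price, running_mw=firm_load_mw, released_mw=interruptible_mw))
    return plan


# Hypothetical 100 MW site with 20 MW of firm load and a $100/MWh curtailment threshold.
plan = dispatch([30, 45, 150, 200, 40], site_capacity_mw=100, firm_load_mw=20, curtail_above=100)
for hour in plan:
    print(hour)
```

In the two high-price hours the site drops to its 20 MW firm load and frees 80 MW for the grid, then returns to full capacity once prices fall.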

While peers are retrofitting existing sites to lease GPUs for AI training workloads, we are prioritizing inference, as we believe this is where we can create the most value. We are designing purpose-built, modular inference facilities that are integrated directly with low-cost power. This infrastructure is engineered for the next generation of intelligent compute. Our deployment of AI inference racks at the Granbury, Texas, data center marks a clear step in that direction.

Our agreement to invest in Exaion and our announced collaboration with MPLX strengthen both sides of our platform: power and compute. Our collaboration with MPLX anchors our strategy on the energy side by integrating low-cost natural gas generation directly into new data center campuses. For compute, our investment agreement to acquire a majority stake in Exaion provides enterprise-grade expertise in AI infrastructure, secure cloud, and inference. These efforts accelerate our goal to deploy secure, efficient, AI-optimized data centers at scale.

Our inference strategy spans the spectrum of infrastructure, from large-scale, energy-optimized sites like those in Texas to highly localized, secure environments such as Exaion’s in Europe. At one end, MARA’s sites are engineered for scale, efficiency, and the lowest cost per token; at the other, Exaion’s deployments bring compute closer to the customer, prioritizing trust, compliance, and data locality.

From Energy to Intelligence: What Comes Next

“We believe electrons are the new oil, and energy is becoming the defining resource of the digital economy.” - Fred Thiel, Q3 2025 Shareholder Letter

AI marks the next phase of monetizing energy. Every unit of power will soon be measured not just in megawatts generated, but in intelligence produced. MARA is building a platform where energy, compute, and flexibility work together to power intelligence. The future of infrastructure isn’t centralized or speculative. It’s intelligent, distributed, and already under construction.

Read about MARA’s investment agreement to acquire a majority stake in AI firm Exaion here.

Read about MARA’s partnership with MPLX, a subsidiary of Marathon Petroleum Corporation, here.
